Hyperparameter tuning can make or break the performance of your AI models. Automating this process saves time, reduces errors, and improves results by systematically testing parameter combinations. This guide walks through the tuning workflow, the main tools, how to scale tuning in enterprise settings, and how to measure its impact.

Automated tuning not only improves model performance but also ensures reproducibility and efficient resource use. Start small, refine your approach, and leverage tools to scale effectively.


How to Automate Hyperparameter Tuning

Automating hyperparameter tuning simplifies what is often a challenging and time-consuming process. The workflow has three steps: define the search space, choose a search strategy, and evaluate and refine the results.

Step 1: Define the Search Space

The search space is the set of values your tuning algorithm may try for each hyperparameter. It is the foundation of the whole process: a space that is too narrow can exclude good configurations, while one that is too broad wastes trials, so it directly affects both the quality of your results and the efficiency of your search.

Start small by focusing on a handful of critical hyperparameters and expand gradually as you gather insights. This keeps the search tractable while giving you quick feedback on which parameters are most influential.

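Leverage domain knowledge to identify the hyperparameters that matter most. Classify them as either discrete (e.g., the number of layers in a neural network) or continuous (e.g., the learning rate). Each type needs its own sampling method: discrete values are drawn from a set or an integer range, while continuous values are drawn from an interval, often on a logarithmic scale for parameters like the learning rate that span several orders of magnitude.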
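As a minimal sketch of type-aware sampling, the snippet below defines a small search space and draws configurations from it. The parameter names, ranges, and the dictionary layout are hypothetical choices for illustration; tuning libraries each have their own way of declaring a space.

```python
import math
import random

# Hypothetical search space; names and ranges are illustrative assumptions.
SEARCH_SPACE = {
    "n_layers": {"type": "int", "low": 1, "high": 4},                             # discrete
    "activation": {"type": "choice", "values": ["relu", "tanh"]},                  # discrete (categorical)
    "learning_rate": {"type": "float", "low": 1e-5, "high": 1e-1, "log": True},   # continuous, log scale
    "dropout": {"type": "float", "low": 0.0, "high": 0.5, "log": False},          # continuous, linear scale
}


def sample_config(space, rng):
    """Draw one configuration, using a sampling rule that matches each parameter's type."""
    config = {}
    for name, spec in space.items():
        if spec["type"] == "int":
            # Integers: uniform over the inclusive range.
            config[name] = rng.randint(spec["low"], spec["high"])
        elif spec["type"] == "choice":
            config[name] = rng.choice(spec["values"])
        elif spec.get("log"):
            # Log-uniform: each order of magnitude is equally likely to be sampled.
            lo, hi = math.log(spec["low"]), math.log(spec["high"])
            config[name] = math.exp(rng.uniform(lo, hi))
        else:
            config[name] = rng.uniform(spec["low"], spec["high"])
    return config


rng = random.Random(0)
for _ in range(3):
    print(sample_config(SEARCH_SPACE, rng))
```

Sampling the learning rate log-uniformly means values between 1e-5 and 1e-3 get as many trials as values between 1e-3 and 1e-1; a plain uniform draw would spend almost all trials above 1e-2.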

Sensitivity analysis can help you zero in on hyperparameters that significantly affect model performance. Many practitioners use a random search initially to explore the broader parameter space and then narrow their focus to the most promising regions.
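One of the simplest forms of sensitivity analysis is one-at-a-time (OAT): vary each hyperparameter over a few values while holding the others at a baseline, then compare how much the score moves. The sketch below assumes a hypothetical `evaluate` function standing in for "train and return a validation score"; OAT ignores interactions between parameters, so treat it as a quick screening step rather than a full analysis.

```python
import math


def evaluate(config):
    """Hypothetical stand-in for training a model and returning a validation score."""
    lr, depth, dropout = config["learning_rate"], config["max_depth"], config["dropout"]
    # Toy response: peaks near lr=0.01 and depth=6; dropout barely matters here.
    return 1.0 - 0.05 * (math.log10(lr) + 2) ** 2 - 0.01 * (depth - 6) ** 2 - 0.001 * dropout


def oat_sensitivity(evaluate, baseline, candidates):
    """Vary one hyperparameter at a time around a baseline; return the score range per parameter."""
    sensitivity = {}
    for name, values in candidates.items():
        scores = [evaluate(dict(baseline, **{name: value})) for value in values]
        sensitivity[name] = max(scores) - min(scores)
    # Largest spread first: these parameters deserve the most search effort.
    return dict(sorted(sensitivity.items(), key=lambda kv: kv[1], reverse=True))


baseline = {"learning_rate": 0.01, "max_depth": 6, "dropout": 0.1}
candidates = {
    "learning_rate": [1e-4, 1e-3, 1e-2, 1e-1],
    "max_depth": [2, 4, 6, 8, 10],
    "dropout": [0.0, 0.1, 0.3, 0.5],
}
print(oat_sensitivity(evaluate, baseline, candidates))
```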

Once your search space is clearly defined, the next step is to decide on a search strategy.

Step 2: Choose a Search Strategy

Your search strategy determines how you navigate the hyperparameter space, balancing thoroughness, speed, and computational efficiency.

The best method for your project depends on your computational budget, time constraints, and the complexity of your hyperparameter space. For example, Bayesian optimization suits problems where each training run is expensive, because it uses earlier results to choose the next configuration, while random search works well for quick, exploratory experiments.
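A small illustration of the grid-versus-random trade-off, under the assumption of a fixed budget of nine trials over two hyperparameters: grid search repeats the same few values of each parameter, while random search tries a new value of every parameter on every trial. The ranges are hypothetical.

```python
import itertools
import random


def grid_configs(lr_values, depth_values):
    """Grid search: every combination of the listed values."""
    return [
        {"learning_rate": lr, "max_depth": d}
        for lr, d in itertools.product(lr_values, depth_values)
    ]


def random_configs(n_trials, rng):
    """Random search: independent draws; the learning rate is sampled log-uniformly."""
    return [
        {"learning_rate": 10 ** rng.uniform(-4, -1), "max_depth": rng.randint(2, 10)}
        for _ in range(n_trials)
    ]


grid = grid_configs([1e-4, 1e-3, 1e-2], [2, 6, 10])  # 3 x 3 = 9 trials
rand = random_configs(9, random.Random(42))           # same budget: 9 trials

# Grid search only ever tries 3 distinct learning rates; random search tries 9.
print(len({c["learning_rate"] for c in grid}), len({c["learning_rate"] for c in rand}))
```

When only one or two hyperparameters really matter, this denser coverage of each individual dimension is what lets random search find good values with fewer trials.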

Step 3: Evaluate and Refine Results

Once you've implemented your search strategy, the next step is to evaluate the results and refine your approach. This phase turns raw optimization data into actionable insights to improve your model's performance.

Cross-validation is a cornerstone of reliable evaluation during hyperparameter tuning. By testing your model on multiple data splits, it gives a more reliable estimate of performance and reduces the risk of picking hyperparameters that only happen to work on one particular split.
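Combining a search with k-fold cross-validation can look like this with scikit-learn's `GridSearchCV`. This is a minimal sketch, assuming scikit-learn is installed; the iris dataset, the SVM, and the grid values are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Scaling lives inside the pipeline so it is re-fit on each training fold,
# which keeps the validation folds from leaking into preprocessing.
pipeline = make_pipeline(StandardScaler(), SVC())
param_grid = {"svc__C": [0.1, 1, 10], "svc__gamma": ["scale", 0.01, 0.1]}

search = GridSearchCV(
    pipeline,
    param_grid,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))

# Mean and spread across the 5 folds for the winning configuration.
best = search.best_index_
print(search.cv_results_["mean_test_score"][best], search.cv_results_["std_test_score"][best])
```

Looking at the standard deviation across folds, not just the mean, helps show whether the chosen configuration is consistently good or only good on average.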

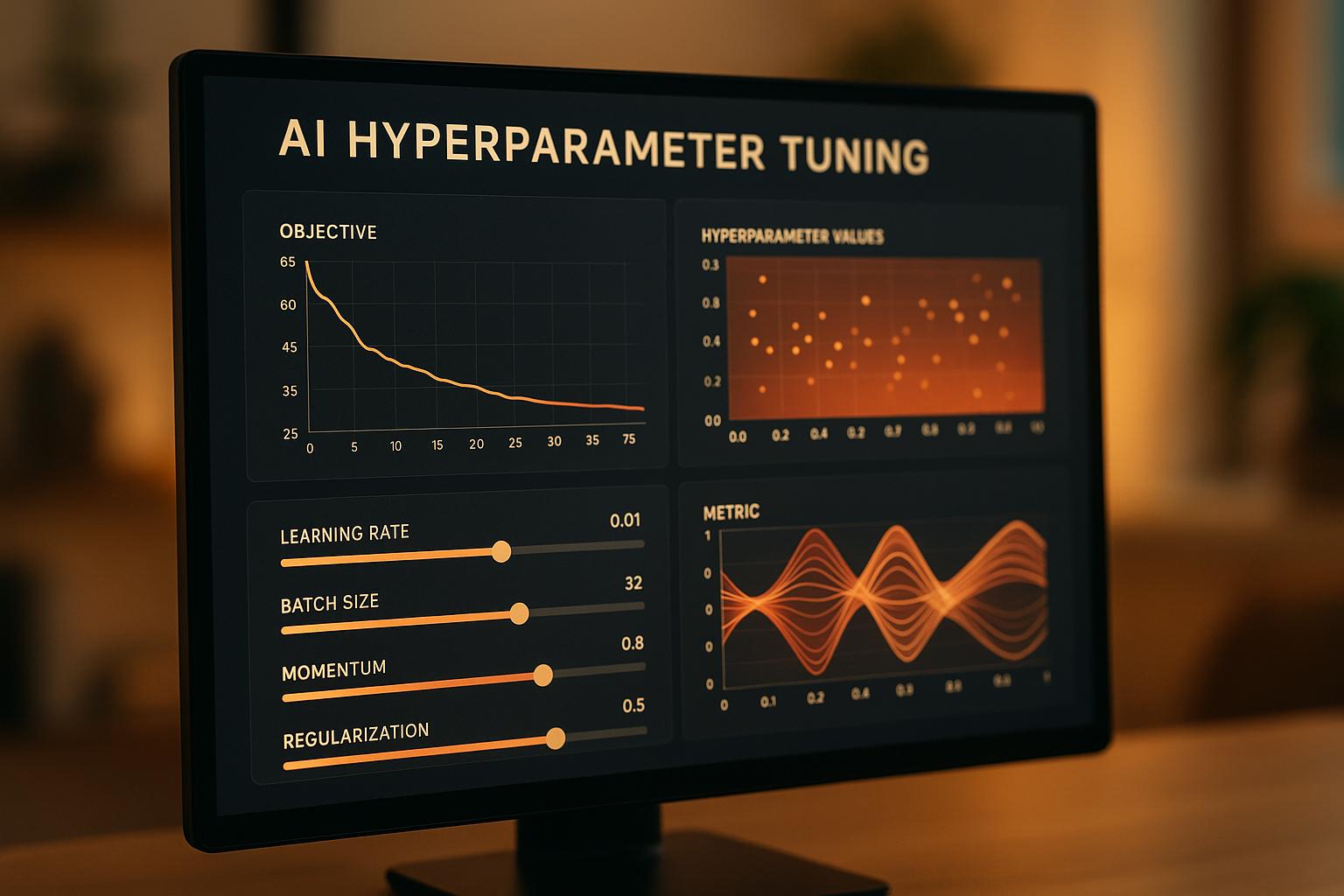

Use visual monitoring tools to track metrics like validation accuracy, training time, and resource usage in real time. These tools can help you spot trends and make adjustments as needed.

Focus on the hyperparameters that have the biggest impact on performance. Sensitivity analysis can help you prioritize them and spend less effort on those with little effect.

To further improve results, incorporate regularization techniques (such as L1 and L2 penalties) to prevent overfitting, and fine-tune these alongside other hyperparameters. Additionally, take advantage of parallel processing to run multiple evaluations at the same time.
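Tuning the regularization type and strength together, while evaluating candidates in parallel, might look like the sketch below. It assumes scikit-learn and SciPy are installed; the dataset, the search ranges, and the choice of `roc_auc` as the metric are illustrative. In `LogisticRegression`, `C` is the inverse of the regularization strength, so smaller values mean stronger L1 or L2 penalties.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RandomizedSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# liblinear supports both L1 and L2 penalties for binary problems.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(solver="liblinear", max_iter=1000))
param_distributions = {
    # Penalty type and strength are searched jointly, not one after the other.
    "logisticregression__penalty": ["l1", "l2"],
    # Sample C across several orders of magnitude.
    "logisticregression__C": loguniform(1e-3, 1e2),
}

search = RandomizedSearchCV(
    pipeline,
    param_distributions,
    n_iter=20,
    cv=5,
    scoring="roc_auc",
    n_jobs=-1,        # run candidate evaluations in parallel on all available cores
    random_state=0,
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```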

For a more dynamic approach, consider iterative methods like successive halving or Hyperband, which allocate more resources to promising configurations and stop underperforming trials early.
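The core of successive halving fits in a few lines: score every configuration on a small budget, keep the best fraction, multiply the budget, and repeat. Hyperband builds on this by running several successive-halving "brackets" with different starting budgets, which is not shown here. `toy_train_and_score` is a hypothetical stand-in for training for `budget` epochs and returning validation accuracy.

```python
import math
import random


def successive_halving(configs, train_and_score, min_budget=1, eta=3):
    """Keep the top 1/eta of configurations at each rung, multiplying the budget by eta."""
    budget = min_budget
    survivors = list(configs)
    history = []  # (budget, number of configs evaluated) per rung
    while len(survivors) > 1:
        scored = [(train_and_score(c, budget), c) for c in survivors]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # higher score is better
        history.append((budget, len(survivors)))
        keep = max(1, len(survivors) // eta)
        survivors = [c for _, c in scored[:keep]]
        budget *= eta
    return survivors[0], history


def toy_train_and_score(config, budget):
    """Hypothetical: accuracy rises with budget toward a ceiling that is highest near lr=1e-2."""
    ceiling = 0.95 - 0.1 * abs(math.log10(config["learning_rate"]) + 2)
    return ceiling * (1 - 0.5 ** budget)


rng = random.Random(0)
configs = [{"learning_rate": 10 ** rng.uniform(-5, 0)} for _ in range(27)]
best, history = successive_halving(configs, toy_train_and_score, min_budget=1, eta=3)
print(best, history)
```

With 27 configurations and `eta=3`, each rung costs 27 budget units (27 x 1, 9 x 3, 3 x 9), so the whole run costs 81 units instead of the 243 needed to train all 27 configurations at the full budget of 9.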

Tools and Frameworks for Automated Hyperparameter Tuning

Hyperparameter tuning has become more accessible with tools designed to simplify and automate the process. These tools not only streamline optimization workflows but also make it easier to incorporate hyperparameter tuning into enterprise-level AI projects.

Popular Hyperparameter Tuning Tools

Ray Tune stands out for its ability to handle distributed hyperparameter tuning across multiple machines. It integrates seamlessly with popular machine learning libraries like PyTorch, TensorFlow, and scikit-learn. Whether you're working on simple tasks or tackling complex optimization challenges, Ray Tune offers features like early stopping and population-based training to enhance efficiency.

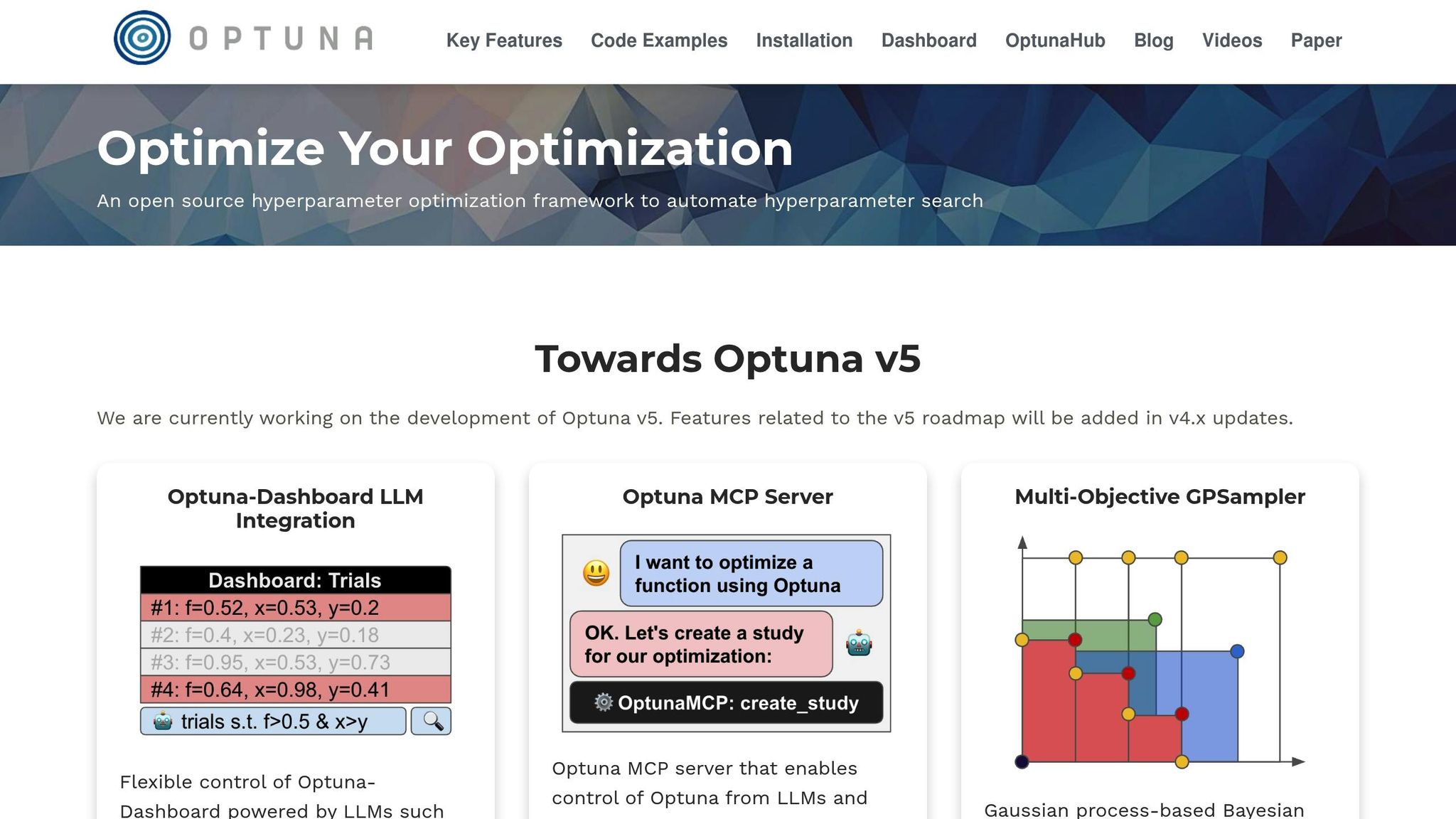

Optuna is a go-to choice for Bayesian optimization. Its intuitive design makes it easy to implement advanced optimization techniques without requiring a deep understanding of the underlying mathematics. Optuna also includes pruning capabilities, which help stop unpromising trials early, saving both time and computational resources.

Hyperopt provides a mature solution for sequential optimization. Using algorithms such as the Tree-structured Parzen Estimator (TPE), it efficiently explores high-dimensional hyperparameter spaces. This makes it an appealing option for teams transitioning from manual tuning processes to automated methods.

Microsoft's Neural Network Intelligence (NNI) is tailored for enterprise needs. It offers features like web-based visualization, robust experiment management, and support for various tuning algorithms. NNI works seamlessly across local setups and cloud clusters, making it a versatile choice for large-scale projects.

Google's Vizier and other cloud-based platforms like AWS SageMaker and Azure Machine Learning provide fully managed hyperparameter tuning services. These platforms handle infrastructure management, allowing teams to focus on model development. They also offer built-in monitoring and scaling capabilities, making them ideal for cloud-based workflows.

Scikit-Optimize is a lightweight option for those already using the scikit-learn ecosystem. With its Gaussian process-based optimization, it’s a great choice for smaller projects or proof-of-concept work, requiring minimal setup and dependencies.

Here’s a quick comparison of these tools to help you decide which one aligns best with your needs:

Tool Comparison Chart

The right tool for your project depends on factors like computational resources, infrastructure, and specific goals. For smaller parameter spaces, grid search remains a simple yet effective option. Random search, on the other hand, works better for continuous parameter ranges. If you’re dealing with computationally expensive model evaluations, Bayesian optimization is a strong choice. For faster results, methods like Hyperband can quickly discard poorly performing configurations, saving valuable time and resources.
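To make the Bayesian optimization idea concrete, here is a minimal sketch that assumes a Gaussian process surrogate (scikit-learn's `GaussianProcessRegressor`) and an expected-improvement acquisition function. This is one common combination among several; Optuna's default TPE sampler, for example, models the search differently. `expensive_objective` is a hypothetical stand-in for a training run, tuned over log10 of the learning rate.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern


def expensive_objective(log_lr):
    """Hypothetical validation loss; its minimum is at log10(lr) = -2."""
    return (log_lr + 2.0) ** 2


def expected_improvement(candidates, gp, best_y, xi=0.01):
    """EI for minimization: expected amount by which each candidate beats best_y."""
    mu, sigma = gp.predict(candidates, return_std=True)
    sigma = np.maximum(sigma, 1e-9)  # avoid division by zero at already-observed points
    improvement = best_y - mu - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)


rng = np.random.default_rng(0)
bounds = (-5.0, -1.0)                      # search log10(learning rate) in [-5, -1]
X = rng.uniform(*bounds, size=(3, 1))      # a few random initial evaluations
y = np.array([expensive_objective(x[0]) for x in X])

grid = np.linspace(*bounds, 500).reshape(-1, 1)
for _ in range(12):
    gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), alpha=1e-6, normalize_y=True, random_state=0)
    gp.fit(X, y)
    # Spend the next expensive evaluation where the surrogate expects the most improvement.
    x_next = grid[np.argmax(expected_improvement(grid, gp, y.min()))]
    X = np.vstack([X, x_next])
    y = np.append(y, expensive_objective(x_next[0]))

best = X[np.argmin(y), 0]
print(f"best log10(lr) = {best:.2f}, loss = {y.min():.3f}")
```

Refitting the surrogate is cheap compared with a real training run, which is why this approach pays off when each evaluation dominates the cost.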

Adding Hyperparameter Tuning to Enterprise Workflows

Incorporating automated hyperparameter tuning into enterprise workflows can significantly improve model performance, ensure consistent results, and optimize resource use. While these methods work well on smaller scales, expanding them to enterprise-wide operations demands careful attention to resource management and tailored solutions.

Managing Resources and Ensuring Reproducibility

Efficient resource management is key when scaling hyperparameter tuning. Instead of exhaustively testing every parameter, focus on the ones that have the greatest impact. Start with broad parameter ranges and progressively narrow them toward promising values; this balances thoroughness and efficiency. To go further, use distributed resources to run evaluations in parallel, and set the number of concurrent jobs to match your available compute so that trials don't exceed system capacity.
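The broad-then-narrow strategy can be sketched as a random search that shrinks its range around the best points after each round, with a capped number of parallel workers. `evaluate` is a hypothetical stand-in for one training run, and the round count, trial count, and keep fraction are illustrative assumptions. Threads are used for simplicity; for CPU-bound Python training code, a `ProcessPoolExecutor` or a distributed framework such as Ray Tune is the more typical choice.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor


def evaluate(log_lr):
    """Hypothetical stand-in for one training run; returns a validation score (higher is better)."""
    return 1.0 - (log_lr + 2.5) ** 2


def coarse_to_fine(evaluate, low, high, rounds=3, trials_per_round=8, keep_frac=0.25,
                   max_workers=4, seed=0):
    """Random search that narrows its range around the best results after each round."""
    rng = random.Random(seed)
    best_x, best_score = None, -math.inf
    for _ in range(rounds):
        xs = [rng.uniform(low, high) for _ in range(trials_per_round)]
        # max_workers caps concurrency so trials don't oversubscribe the hardware.
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            scores = list(pool.map(evaluate, xs))
        ranked = sorted(zip(scores, xs), reverse=True)
        if ranked[0][0] > best_score:
            best_score, best_x = ranked[0]
        # Shrink the range to span the top fraction of this round's points.
        top = [x for _, x in ranked[: max(2, int(len(ranked) * keep_frac))]]
        low, high = min(top), max(top)
    return best_x, best_score, (low, high)


best_x, best_score, final_range = coarse_to_fine(evaluate, -5.0, -1.0)
print(f"best log10(lr) = {best_x:.2f}, final range = ({final_range[0]:.2f}, {final_range[1]:.2f})")
```

Narrowing this aggressively can discard the true optimum if early rounds are unlucky, so in practice you might widen the new range by a margin or keep a few exploratory trials in every round.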

Reproducibility is equally important, especially in collaborative environments where models are developed by multiple teams or need validation across departments. Standard practices such as fixing random seeds and systematically tracking every trial's configuration and results help ensure results can be replicated. Early stopping, used in methods such as successive halving and Hyperband, saves resources by halting underperforming configurations early. Robust cross-validation is also essential for accurately assessing model performance and reducing the risk of overfitting.
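A minimal sketch of seed handling for reproducible trials, assuming NumPy is available: each trial gets its own seed derived from one master seed, and every random number generator the trial touches is seeded from it. `run_trial` and its configs are hypothetical. Frameworks such as PyTorch have their own seeding calls (for example `torch.manual_seed`), and some GPU operations can remain nondeterministic even with fixed seeds.

```python
import random

import numpy as np


def run_trial(config, seed):
    """Hypothetical trial: every source of randomness is seeded from `seed`."""
    random.seed(seed)                      # Python's global RNG (e.g., data shuffling)
    rng = np.random.default_rng(seed)      # NumPy generator used explicitly
    noise = float(rng.normal(0.0, 0.01))
    order = list(range(5))
    random.shuffle(order)
    return round(1.0 - abs(config["lr"] - 0.01) + noise, 6), order


MASTER_SEED = 1234
configs = [{"lr": 0.001}, {"lr": 0.01}, {"lr": 0.1}]
# One seed per trial, derived from the master seed, so results don't depend on run order.
trial_seeds = np.random.SeedSequence(MASTER_SEED).generate_state(len(configs))

first = [run_trial(c, int(s)) for c, s in zip(configs, trial_seeds)]
second = [run_trial(c, int(s)) for c, s in zip(configs, trial_seeds)]
assert first == second  # identical seeds produce identical results
print(first)
```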

These foundational practices prepare enterprises for customized solutions tailored to their specific needs.

Custom Solutions with Devcore

Beyond standard resource strategies, enterprises often benefit from tailored hyperparameter tuning solutions that align with their unique workflows and objectives. While off-the-shelf tools provide a good starting point, they may not fully address the specific demands of large-scale business operations.

Devcore specializes in creating custom automation systems that streamline hyperparameter tuning and enhance AI capabilities across enterprise operations. Using their proprietary Leverage Blueprint™ methodology, Devcore identifies key areas where automated tuning can deliver the most value, improving both performance and business outcomes.

Customizing hyperparameter tuning to specific tasks can maximize model performance and return on investment. These solutions are designed to integrate seamlessly with existing data pipelines, meet compliance requirements, and support ongoing performance monitoring. With APIs, enterprises can quickly connect these systems to existing tools, enabling custom dashboards and workflow optimization.

Scalability is another critical factor. Custom solutions are built to handle growing data volumes, increasing model complexity, and rising computational demands without requiring major system overhauls. This ensures that hyperparameter tuning processes can adapt as the business evolves.

When choosing an AI development partner, consider factors like their technical expertise, industry knowledge, scalability capabilities, and commitment to data privacy. Enterprises can opt for faster deployment by configuring existing tools or invest in fully customized solutions that align precisely with their strategic goals.

Measuring and Improving Performance

Hyperparameter tuning is most effective when it’s backed by the right metrics and a clear connection to business goals. By focusing on meaningful measurements, you can ensure your tuning efforts deliver tangible value.

Setting Metrics and Goals

To bridge the gap between technical optimization and business impact, start by defining performance metrics that align with your objectives. Choose metrics that directly reflect how your model will perform in real-world scenarios.

Cross-validation plays a key role here. By testing your model across multiple data splits, you ensure that your chosen metrics truly represent performance and that your tuned hyperparameters will generalize well to unseen data.

Beyond primary metrics, don’t overlook secondary objectives that fit your business needs, such as training time, memory usage, model interpretability, or inference speed. For example, optimizing for shorter training times or lower memory consumption can cut unnecessary computational costs. Domain knowledge can also sharpen your metric selection: in credit risk models, for instance, false negatives should be weighted more heavily than false positives, because missing a high-risk case can be costly.
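One way to encode "false negatives cost more" is to tune against an F-beta score with beta greater than 1, which weights recall above precision. The sketch below assumes scikit-learn; the labels are a hypothetical toy example, and `beta=2` is an illustrative choice rather than a standard for credit risk.

```python
from sklearn.metrics import f1_score, fbeta_score, make_scorer

# Hypothetical credit-risk labels: 1 = high-risk applicant.
y_true = [1, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 0]  # precise, but misses two of the three high-risk cases

print(f1_score(y_true, y_pred))             # 0.5
print(fbeta_score(y_true, y_pred, beta=2))  # about 0.385: missed positives are penalized more

# Pass this as `scoring=` to GridSearchCV or RandomizedSearchCV so the search
# optimizes the business-weighted metric instead of plain accuracy.
recall_weighted_scorer = make_scorer(fbeta_score, beta=2)
```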

Reading Results and Creating Business Value

Once you’ve measured performance, the next step is turning those results into actionable insights. This means going beyond the final metric scores to understand how hyperparameter changes affect your model’s behavior. Visualization tools like scatter plots, heat maps, and parallel coordinate plots can uncover patterns, such as interactions between parameters or diminishing returns.

For more complex relationships, advanced techniques like PCA (Principal Component Analysis), t-SNE, or surrogate models can simplify the analysis. For example, in one case, Bayesian optimization reduced validation error by 15% with a small learning rate adjustment, outperforming traditional grid search methods. Similarly, financial analysts discovered that increasing the number of decision trees beyond a certain point only marginally improved performance but significantly increased training time. This insight allowed them to pinpoint an optimal configuration that balanced robust predictions with computational efficiency.
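The "diminishing returns" decision described above can be made explicit with a simple rule: among all configurations whose score is within a tolerance of the best, pick the cheapest. The sketch below is a hypothetical helper; the scores and training costs are made-up illustrative numbers, not results from any real sweep.

```python
def cheapest_within_tolerance(results, tolerance=0.005):
    """Return the lowest-cost result whose score is within `tolerance` of the best score.

    Each result is a dict with 'params', 'score' (higher is better) and 'cost'.
    """
    best_score = max(r["score"] for r in results)
    acceptable = [r for r in results if r["score"] >= best_score - tolerance]
    return min(acceptable, key=lambda r: r["cost"])


# Illustrative numbers only: more trees help, but with shrinking gains and growing cost.
results = [
    {"params": {"n_estimators": 100}, "score": 0.861, "cost": 12.0},
    {"params": {"n_estimators": 200}, "score": 0.875, "cost": 23.0},
    {"params": {"n_estimators": 400}, "score": 0.878, "cost": 47.0},
    {"params": {"n_estimators": 800}, "score": 0.879, "cost": 95.0},
]
choice = cheapest_within_tolerance(results, tolerance=0.005)
print(choice["params"])  # {'n_estimators': 200}
```

The tolerance is a business decision: it states how much accuracy you are willing to trade for a cheaper model.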

Continuous monitoring is essential to keep models performing well as data patterns shift. Implement systems that track key metrics and flag performance drops. Over time, a culture of learning from each tuning iteration and incorporating advanced statistical methods will support ongoing improvement.
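A minimal sketch of flagging performance drops, assuming you log one metric value per period (for example, daily accuracy on labeled production data). The class name, baseline, threshold, and window size are hypothetical choices to adapt to your own system.

```python
from collections import deque


class MetricMonitor:
    """Flag a drop when the rolling mean of a metric falls more than `max_drop`
    below the baseline measured at deployment."""

    def __init__(self, baseline, max_drop=0.02, window=5):
        self.baseline = baseline
        self.max_drop = max_drop
        self.values = deque(maxlen=window)  # keeps only the most recent `window` values

    def update(self, value):
        self.values.append(value)
        rolling_mean = sum(self.values) / len(self.values)
        return rolling_mean < self.baseline - self.max_drop  # True means "alert"


monitor = MetricMonitor(baseline=0.90, max_drop=0.02, window=3)
daily_accuracy = [0.91, 0.90, 0.89, 0.87, 0.86, 0.85]
alerts = [monitor.update(v) for v in daily_accuracy]
print(alerts)  # [False, False, False, False, True, True]
```

Averaging over a window keeps a single noisy day from triggering a retuning run, at the cost of reacting a little later to real drift.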

Finally, tie technical results to business outcomes. Whether it’s a slight accuracy increase that prevents fraud-related losses or faster inference times enabling real-time customer recommendations, showing the direct impact of tuning on business metrics is key. For instance, in credit risk assessment, fine-tuning hyperparameters like the learning rate, number of estimators, and maximum tree depth in gradient boosting models can reduce financial risks and improve decision-making. By aligning model performance with company goals, hyperparameter tuning becomes a powerful tool for driving strategic success.
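As a sketch of the gradient boosting case, the search below tunes the learning rate, number of estimators, and tree depth on a synthetic, imbalanced dataset standing in for credit-risk data. It assumes scikit-learn and SciPy; the ranges, the small `n_iter`, and the choice of `average_precision` (which focuses on the rare positive class) are illustrative assumptions.

```python
from scipy.stats import loguniform, randint
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import RandomizedSearchCV

# Synthetic, imbalanced stand-in for a credit-risk dataset (roughly 10% positives).
X, y = make_classification(n_samples=600, n_features=12, weights=[0.9, 0.1], random_state=0)

param_distributions = {
    "learning_rate": loguniform(0.01, 0.3),
    "n_estimators": randint(50, 201),  # 50..200 trees (upper bound is exclusive)
    "max_depth": randint(2, 5),        # depth 2..4
}
search = RandomizedSearchCV(
    GradientBoostingClassifier(random_state=0),
    param_distributions,
    n_iter=8,
    cv=3,
    scoring="average_precision",  # summarizes the precision/recall trade-off on the rare class
    random_state=0,
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```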

Conclusion

Automating hyperparameter tuning has reshaped how AI systems are optimized, offering clear, measurable advantages. As Samuel Hieber explains:

"As AI development becomes more resource-intensive and competitive, hyperparameter tuning is proving essential to building smarter, faster, and more cost-effective models".

The real-world impact is hard to ignore. For instance, Uber reported a 15% boost in prediction accuracy for their demand forecasting models through systematic hyperparameter optimization, leading to improved operational efficiency and better customer experiences. On a broader scale, automated tuning can reduce AI learning costs by up to 90% while significantly shortening the time needed to achieve peak model performance. These outcomes highlight the competitive advantage automation brings to the table.

Unlike manual methods, automated tuning eliminates guesswork, enhances reproducibility, and makes better use of resources. It allows for fast exploration of hyperparameter spaces and can handle the complexity of modern models that manual tuning simply cannot manage. With 65% of organizations already using or experimenting with AI technologies, and industries like IT and aerospace reporting adoption rates of 83–85%, automated hyperparameter tuning is no longer a luxury; it's a necessity.

To get started, begin with smaller hyperparameter subsets, leverage cross-validation, and closely monitor your results. Thanks to advancements in cloud infrastructure and techniques like Bayesian optimization, evolutionary algorithms, and hybrid methods, these tools are more accessible than ever. These innovations represent the next step in optimizing AI systems effectively.

FAQs

What are the advantages of automated hyperparameter tuning compared to manual tuning?

Automated hyperparameter tuning brings clear benefits when compared to manual methods, particularly in speed and precision. By using algorithms to navigate the hyperparameter space, it can pinpoint the best configurations much faster and with greater thoroughness than manual efforts.

In contrast, manual tuning is a labor-intensive process that often suffers from human error, resulting in less-than-ideal outcomes. Automated techniques not only save a significant amount of time but also enhance model performance, especially when working with intricate AI systems or massive datasets.

What challenges might arise when using Bayesian optimization for hyperparameter tuning?

Bayesian optimization is a widely used approach for hyperparameter tuning, but it’s not without its hurdles. One of the primary challenges is that it can be resource-intensive, especially when working with large datasets or highly complex models. This often translates to longer processing times and higher computational demands.

Another issue arises in high-dimensional hyperparameter spaces, where Bayesian optimization can struggle to find optimal solutions efficiently. This difficulty can lead to slower progress in the tuning process, particularly when the search space becomes overwhelmingly large.

Additionally, Bayesian optimization depends on prior assumptions about the search space. If these assumptions don’t align well with the problem at hand, the method’s effectiveness can diminish. In cases involving very large or constantly changing hyperparameter spaces, other tuning methods might yield better results.

Even with these limitations, Bayesian optimization continues to be a go-to tool for many AI systems. When used alongside the right strategies and sufficient resources, it can significantly enhance the performance of machine learning models.

How can businesses ensure automated hyperparameter tuning is both scalable and consistent in large AI projects?

To scale and maintain consistency in automated hyperparameter tuning for large-scale AI projects, businesses should consider using distributed tuning frameworks. These frameworks are designed to handle the challenges of high-dimensional parameter spaces and massive datasets, making the tuning process more efficient.

Another key component is provenance tracking. By documenting every step of the tuning process, teams can ensure reproducibility and maintain consistency over time, which is essential for long-term success.
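A minimal sketch of provenance tracking, assuming an append-only JSON Lines file: each trial records its configuration, seed, metrics, and code version. The helper names and field set are hypothetical, a suggested minimum rather than a standard; dedicated tools such as MLflow, or a tuning framework's own storage, serve the same purpose at scale.

```python
import hashlib
import json
import os
import platform
import tempfile
import time


def log_trial(path, config, seed, metrics, code_version):
    """Append one trial record as a JSON line and return it."""
    record = {
        "timestamp": time.time(),
        "config": config,
        "seed": seed,
        "metrics": metrics,
        "code_version": code_version,  # e.g., a git commit hash
        "python_version": platform.python_version(),
        # Stable fingerprint of the configuration (key order doesn't matter).
        "config_hash": hashlib.sha256(
            json.dumps(config, sort_keys=True).encode()
        ).hexdigest()[:12],
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def load_trials(path):
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


log_path = os.path.join(tempfile.mkdtemp(), "trials.jsonl")
# Hypothetical values for illustration.
log_trial(log_path, {"learning_rate": 0.01, "max_depth": 6}, seed=1234,
          metrics={"val_auc": 0.91}, code_version="abc1234")
print(load_trials(log_path)[0]["config_hash"])
```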

Adopting MLOps best practices can also make a significant difference. Practices like automation, parallelization, and continuous monitoring help streamline workflows and optimize resource usage. Together, these strategies not only improve reproducibility but also provide the scalability needed to tackle enterprise-level AI demands effectively.
